Jingjing Wang

Image Processing Center, Beihang University, Beijing, China

A Large-Scale Dataset for Molecular Structure-Language Description via a Rule-Regularized Method

Feb 02, 2026

Abstract: Molecular function is largely determined by structure. Accurately aligning molecular structure with natural language is therefore essential for enabling large language models (LLMs) to reason about downstream chemical tasks. However, the substantial cost of human annotation makes it infeasible to construct large-scale, high-quality datasets of structure-grounded descriptions. In this work, we propose a fully automated annotation framework for generating precise molecular structure descriptions at scale. Our approach builds upon and extends a rule-based chemical nomenclature parser to interpret IUPAC names and construct enriched, structured XML metadata that explicitly encodes molecular structure. This metadata is then used to guide LLMs in producing accurate natural-language descriptions. Using this framework, we curate a large-scale dataset of approximately $163$k molecule-description pairs. A rigorous validation protocol combining LLM-based and expert human evaluation on a subset of $2,000$ molecules demonstrates a high description precision of $98.6\%$. The resulting dataset provides a reliable foundation for future molecule-language alignment, and the proposed annotation method is readily extensible to larger datasets and broader chemical tasks that rely on structural descriptions.

LightCity: An Urban Dataset for Outdoor Inverse Rendering and Reconstruction under Multi-illumination Conditions

Feb 01, 2026

Abstract: Inverse rendering in urban scenes is pivotal for applications like autonomous driving and digital twins. Yet, it faces significant challenges due to complex illumination conditions, including multi-illumination and indirect light and shadow effects. However, the effects of these challenges on intrinsic decomposition and 3D reconstruction have not been explored due to the lack of appropriate datasets. In this paper, we present LightCity, a novel high-quality synthetic urban dataset featuring diverse illumination conditions with realistic indirect light and shadow effects. LightCity encompasses over 300 sky maps with highly controllable illumination, varying scales with street-level and aerial perspectives over 50K images, and rich properties such as depth, normal, material components, light and indirect light, etc. Besides, we leverage LightCity to benchmark three fundamental tasks in the urban environments and conduct a comprehensive analysis of these benchmarks, laying a robust foundation for advancing related research.

Prior-Guided DETR for Ultrasound Nodule Detection

Jan 05, 2026

Abstract: Accurate detection of ultrasound nodules is essential for the early diagnosis and treatment of thyroid and breast cancers. However, this task remains challenging due to irregular nodule shapes, indistinct boundaries, substantial scale variations, and the presence of speckle noise that degrades structural visibility. To address these challenges, we propose a prior-guided DETR framework specifically designed for ultrasound nodule detection. Instead of relying on purely data-driven feature learning, the proposed framework progressively incorporates different prior knowledge at multiple stages of the network. First, a Spatially-adaptive Deformable FFN with Prior Regularization (SDFPR) is embedded into the CNN backbone to inject geometric priors into deformable sampling, stabilizing feature extraction for irregular and blurred nodules. Second, a Multi-scale Spatial-Frequency Feature Mixer (MSFFM) is designed to extract multi-scale structural priors, where spatial-domain processing emphasizes contour continuity and boundary cues, while frequency-domain modeling captures global morphology and suppresses speckle noise. Furthermore, a Dense Feature Interaction (DFI) mechanism propagates and exploits these prior-modulated features across all encoder layers, enabling the decoder to enhance query refinement under consistent geometric and structural guidance. Experiments conducted on two clinically collected thyroid ultrasound datasets (Thyroid I and Thyroid II) and two public benchmarks (TN3K and BUSI) for thyroid and breast nodules demonstrate that the proposed method achieves superior accuracy compared with 18 detection methods, particularly in detecting morphologically complex nodules. The source code is publicly available at https://github.com/wjj1wjj/Ultrasound-DETR.

Nodule-DETR: A Novel DETR Architecture with Frequency-Channel Attention for Ultrasound Thyroid Nodule Detection

Jan 05, 2026

Abstract: Thyroid cancer is the most common endocrine malignancy, and its incidence is rising globally. While ultrasound is the preferred imaging modality for detecting thyroid nodules, its diagnostic accuracy is often limited by challenges such as low image contrast and blurred nodule boundaries. To address these issues, we propose Nodule-DETR, a novel detection transformer (DETR) architecture designed for robust thyroid nodule detection in ultrasound images. Nodule-DETR introduces three key innovations: a Multi-Spectral Frequency-domain Channel Attention (MSFCA) module that leverages frequency analysis to enhance features of low-contrast nodules; a Hierarchical Feature Fusion (HFF) module for efficient multi-scale integration; and Multi-Scale Deformable Attention (MSDA) to flexibly capture small and irregularly shaped nodules. We conducted extensive experiments on a clinical dataset of real-world thyroid ultrasound images. The results demonstrate that Nodule-DETR achieves state-of-the-art performance, outperforming the baseline model by a significant margin of 0.149 in mAP@0.5:0.95. The superior accuracy of Nodule-DETR highlights its significant potential for clinical application as an effective tool in computer-aided thyroid diagnosis. The code is available at https://github.com/wjj1wjj/Nodule-DETR.

Shared Spatial Memory Through Predictive Coding

Nov 06, 2025

Abstract: Sharing and reconstructing a consistent spatial memory is a critical challenge in multi-agent systems, where partial observability and limited bandwidth often lead to catastrophic failures in coordination. We introduce a multi-agent predictive coding framework that formulates coordination as the minimization of mutual uncertainty among agents. Instantiated as an information bottleneck objective, it prompts agents to learn not only who and what to communicate but also when. At the foundation of this framework lies a grid-cell-like metric as internal spatial coding for self-localization, emerging spontaneously from self-supervised motion prediction. Building upon this internal spatial code, agents gradually develop a bandwidth-efficient communication mechanism and specialized neural populations that encode partners' locations: an artificial analogue of hippocampal social place cells (SPCs). These social representations are further enacted by a hierarchical reinforcement learning policy that actively explores to reduce joint uncertainty. On the Memory-Maze benchmark, our approach shows exceptional resilience to bandwidth constraints: success degrades gracefully from 73.5% to 64.4% as bandwidth shrinks from 128 to 4 bits/step, whereas a full-broadcast baseline collapses from 67.6% to 28.6%. Our findings establish a theoretically principled and biologically plausible basis for how complex social representations emerge from a unified predictive drive, leading to social collective intelligence.
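
To put the reported bandwidth results side by side, the short sketch below computes the absolute and relative drop in success rate for each method. It uses only the four percentages quoted in the abstract; the helper name `degradation` and the dictionary labels are illustrative assumptions, not names from the paper.

```python
def degradation(high: float, low: float) -> tuple[float, float]:
    """Return (absolute drop in percentage points, relative drop in percent)."""
    absolute = high - low
    relative = 100.0 * absolute / high
    return absolute, relative


# Success rates (%) at 128 and 4 bits/step, as quoted in the abstract.
reported = {
    "predictive coding": (73.5, 64.4),
    "full broadcast": (67.6, 28.6),
}

for name, (at_128_bits, at_4_bits) in reported.items():
    abs_drop, rel_drop = degradation(at_128_bits, at_4_bits)
    print(f"{name}: -{abs_drop:.1f} points ({rel_drop:.1f}% relative)")
```

On these figures the proposed approach loses about 9 percentage points, while the full-broadcast baseline loses about 39.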

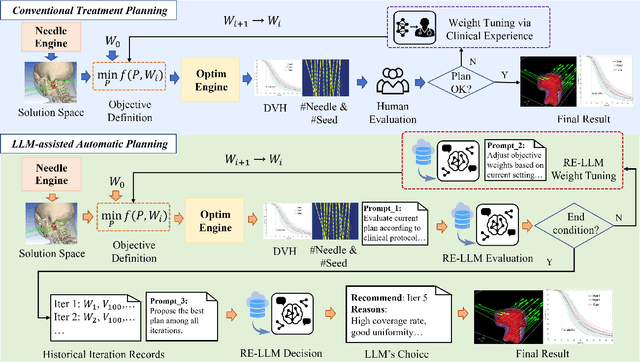

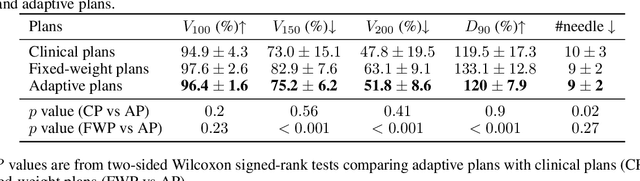

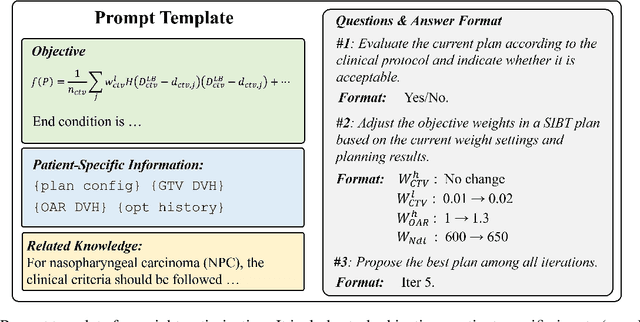

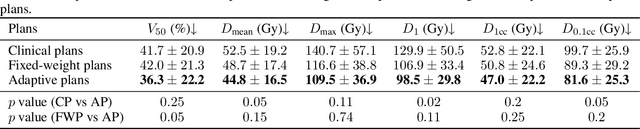

An Iterative LLM Framework for SIBT utilizing RAG-based Adaptive Weight Optimization

Sep 10, 2025

Abstract: Seed implant brachytherapy (SIBT) is an effective cancer treatment modality; however, clinical planning often relies on manual adjustment of objective function weights, leading to inefficiencies and suboptimal results. This study proposes an adaptive weight optimization framework for SIBT planning, driven by large language models (LLMs). A locally deployed DeepSeek-R1 LLM is integrated with an automatic planning algorithm in an iterative loop. Starting with fixed weights, the LLM evaluates plan quality and recommends new weights for the next iteration. This process continues until convergence criteria are met, after which the LLM conducts a comprehensive evaluation to identify the optimal plan. A clinical knowledge base, constructed and queried via retrieval-augmented generation (RAG), enhances the model's domain-specific reasoning. The proposed method was validated on 23 patient cases, showing that the LLM-assisted approach produces plans that are comparable to or better than clinically approved and fixed-weight plans in terms of dose homogeneity for the clinical target volume (CTV) and sparing of organs at risk (OARs). The study demonstrates the potential use of LLMs in SIBT planning automation.
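
The iterative loop described above can be sketched as plain control flow. This is a minimal illustration under stated assumptions, not the authors' implementation: `iterate_weights`, `toy_planner`, `toy_advisor`, and `toy_selector` are hypothetical stand-ins for the automatic planning algorithm, the LLM's weight recommendation, and the LLM's final plan selection. The convergence rule (largest weight change below `tol`) is also an assumption, since the abstract does not state the criteria.

```python
from typing import Callable, Dict, List, Tuple

Weights = Dict[str, float]
Plan = Dict[str, float]  # toy plan summary: one score per objective


def iterate_weights(
    plan_fn: Callable[[Weights], Plan],
    advise_fn: Callable[[Weights, Plan], Weights],
    select_fn: Callable[[List[Tuple[Weights, Plan]]], int],
    initial: Weights,
    tol: float = 1e-3,
    max_iters: int = 100,
) -> Tuple[Weights, Plan]:
    """Plan, ask the advisor for new weights, repeat until the weights settle."""
    history: List[Tuple[Weights, Plan]] = []
    weights = dict(initial)
    for _ in range(max_iters):
        plan = plan_fn(weights)
        history.append((weights, plan))
        proposed = advise_fn(weights, plan)  # stands in for the LLM recommendation
        change = max(abs(proposed[k] - weights[k]) for k in weights)
        weights = proposed
        if change < tol:  # assumed convergence criterion
            break
    return history[select_fn(history)]  # stands in for the final LLM evaluation


def toy_planner(weights: Weights) -> Plan:
    """Hypothetical planner: each objective's score is its share of total weight."""
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}


def toy_advisor(weights: Weights, plan: Plan) -> Weights:
    """Hypothetical advisor: raise the weight of the worst-scoring objective."""
    worst = min(plan, key=plan.get)
    updated = dict(weights)
    updated[worst] += 2.0 * (max(plan.values()) - plan[worst])
    return updated


def toy_selector(history: List[Tuple[Weights, Plan]]) -> int:
    """Hypothetical selector: pick the plan whose worst objective scores highest."""
    return max(range(len(history)), key=lambda i: min(history[i][1].values()))


best_weights, best_plan = iterate_weights(
    toy_planner, toy_advisor, toy_selector, {"ctv": 1.0, "oar": 3.0}
)
print(best_weights, best_plan)
```

Keeping the planner, advisor, and selector as injected callables means the loop itself makes no assumption about how the LLM is deployed or queried.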

EndoUFM: Utilizing Foundation Models for Monocular depth estimation of endoscopic images

Aug 25, 2025

Abstract: Depth estimation is a foundational component for 3D reconstruction in minimally invasive endoscopic surgeries. However, existing monocular depth estimation techniques often perform poorly under the varying illumination and complex textures of the surgical environment. While powerful visual foundation models offer a promising solution, their training on natural images leads to significant domain adaptability limitations and semantic perception deficiencies when applied to endoscopy. In this study, we introduce EndoUFM, an unsupervised monocular depth estimation framework that integrates dual foundation models for surgical scenes, enhancing depth estimation performance by leveraging their powerful pre-learned priors. The framework features a novel adaptive fine-tuning strategy that incorporates Random Vector Low-Rank Adaptation (RVLoRA) to enhance model adaptability, and a Residual block based on Depthwise Separable Convolution (Res-DSC) to improve the capture of fine-grained local features. Furthermore, we design a mask-guided smoothness loss to enforce depth consistency within anatomical tissue structures. Extensive experiments on the SCARED, Hamlyn, SERV-CT, and EndoNeRF datasets confirm that our method achieves state-of-the-art performance while maintaining an efficient model size. This work contributes to augmenting surgeons' spatial perception during minimally invasive procedures, thereby enhancing surgical precision and safety, with crucial implications for augmented reality and navigation systems.

Large Deviations for Sequential Tests of Statistical Sequence Matching

Jun 04, 2025

Abstract: We revisit the problem of statistical sequence matching initiated by Unnikrishnan (TIT 2015) and derive theoretical performance guarantees for sequential tests that have bounded expected stopping times. Specifically, in this problem, one is given two databases of sequences and the task is to identify all matched pairs of sequences. In each database, each sequence is generated i.i.d. from a distinct distribution and a pair of sequences is said to be matched if they are generated from the same distribution. The generating distribution of each sequence is unknown. We first consider the case where the number of matches is known and derive the exact exponential decay rate of the mismatch (error) probability, a.k.a. the mismatch exponent, under each hypothesis for optimal sequential tests. Our results reveal the benefit of sequentiality by showing that optimal sequential tests have a larger mismatch exponent than the fixed-length tests by Zhou et al. (TIT 2024). Subsequently, we generalize our achievability result to the case of an unknown number of matches. In this case, two additional error probabilities arise: false alarm and false reject probabilities. We propose a corresponding sequential test, show that the test has bounded expected stopping time under certain conditions, and characterize the tradeoff among the exponential decay rates of the three error probabilities. Furthermore, we reveal the benefit of sequentiality over the two-step fixed-length test by Zhou et al. (TIT 2024) and propose a one-step fixed-length test that has no worse performance than the fixed-length test by Zhou et al. (TIT 2024). When specialized to the case where either database contains a single sequence, our results specialize to large deviations of sequential tests for statistical classification, the binary case of which was recently studied by Hsu, Li and Wang (ITW 2022).

Task-Oriented Communications for Visual Navigation with Edge-Aerial Collaboration in Low Altitude Economy

Apr 29, 2025

Abstract: To support the Low Altitude Economy (LAE), precise localization of unmanned aerial vehicles (UAVs) is essential in urban areas where global positioning system (GPS) signals are unavailable. Vision-based methods offer a viable alternative but face severe bandwidth, memory and processing constraints on lightweight UAVs. Inspired by mammalian spatial cognition, we propose a task-oriented communication framework, where UAVs equipped with multi-camera systems extract compact multi-view features and offload localization tasks to edge servers. We introduce the Orthogonally-constrained Variational Information Bottleneck encoder (O-VIB), which incorporates automatic relevance determination (ARD) to prune non-informative features while enforcing orthogonality to minimize redundancy. This enables efficient and accurate localization with minimal transmission cost. Extensive evaluation on a dedicated LAE UAV dataset shows that O-VIB achieves high-precision localization under stringent bandwidth budgets. Code and dataset will be made publicly available: github.com/fangzr/TOC-Edge-Aerial.

Integration Flow Models

Apr 28, 2025

Abstract: Ordinary differential equation (ODE) based generative models have emerged as a powerful approach for producing high-quality samples in many applications. However, ODE-based methods either suffer from the discretization error of numerical ODE solvers, which restricts sample quality when only a few function evaluations (NFEs) are used, or struggle with training instability. In this paper, we propose Integration Flow, which directly learns the integral of ODE-based trajectory paths without solving the ODE functions. Moreover, Integration Flow explicitly incorporates the target state $\mathbf{x}_0$ as the anchor state in guiding the reverse-time dynamics. We have theoretically proven this can contribute to both stability and accuracy. To the best of our knowledge, Integration Flow is the first model with a unified structure to estimate ODE-based generative models and the first to show the exact straightness of 1-Rectified Flow without reflow. Through theoretical analysis and empirical evaluations, we show that Integration Flow achieves improved performance when applied to existing ODE-based models, such as diffusion models, Rectified Flows, and PFGM++. Specifically, Integration Flow achieves one-step generation on CIFAR10 with FIDs of 2.86 for the Variance Exploding (VE) diffusion model, 3.36 for rectified flow without reflow, and 2.91 for PFGM++; and on ImageNet with FIDs of 4.09 for the VE diffusion model, 4.35 for rectified flow without reflow, and 4.15 for PFGM++.
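
The discretization error mentioned above can be seen on a toy problem. The sketch below is not Integration Flow and is unrelated to any generative model: it integrates the scalar ODE $dx/dt = -x$ with forward Euler and compares the result against the exact solution $x(1) = e^{-1}$ for different numbers of function evaluations. The test equation and the name `euler_solve` are assumptions chosen for illustration.

```python
import math


def euler_solve(x0: float, t_end: float, steps: int) -> float:
    """Integrate dx/dt = -x from t=0 to t_end with forward Euler.

    Each step costs one evaluation of the right-hand side, so `steps`
    plays the role of the number of function evaluations (NFE).
    """
    x = x0
    h = t_end / steps
    for _ in range(steps):
        x += h * (-x)
    return x


exact = math.exp(-1.0)  # closed-form solution x(1) for x(0) = 1
for nfe in (1, 2, 10, 100):
    approx = euler_solve(1.0, 1.0, nfe)
    print(f"NFE={nfe:>3}: x(1) ~= {approx:.4f}, abs error = {abs(approx - exact):.4f}")
```

With a single evaluation the Euler estimate lands at exactly zero, and the error shrinks only as the step count grows, which is the trade-off that motivates learning the integral directly.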
